Showing posts with label Finance. Show all posts

Wednesday, August 28, 2013

Nasdaq freeze

An anonymous correspondent explained last week's Nasdaq freeze thus.

"The truth about what happened Aug 22 to the Nasdaq is that new limit-up/limit-down rules took effect in derivatives (exchange-traded products) listed at Arca at the same time that new options began trading marketwide that day. Since the market is full of complex, multi-leg trades, bad data propagated, affecting Goldman’s options-trading algorithms Tuesday, spawning hundreds of derivatives trading halts by VIX expirations Wednesday, and producing bad data in the consolidated tape by Thursday, halting Nasdaq trading. So the real culprit was the SEC. But it’s bad form to say publicly that the regulator is responsible for jeopardizing the market."

I can't vouch for the story, or even for understanding it all. But I'm interested in several emerging stories that some trading pathologies are in part unintended consequences of SEC regulation. It's also not the first time I've heard of financial market participants afraid to speak out and earn the disfavor of their regulator.

Monday, August 26, 2013

Macro-prudential policy

Source: Wall Street Journal

Interest rates make the headlines, but the Federal Reserve's most important role is going to be as the gargantuan systemic financial regulator. The really big question is whether and how the Fed will pursue a "macroprudential" policy. This is the emerging notion that central banks should intensively monitor the whole financial system and actively intervene in a broad range of markets toward a wide range of goals including financial and economic stability.

For example, the Fed is urged to spot developing "bubbles," "speculative excesses" and "overheated" markets, and then stop them—as Fed Governor Sarah Bloom Raskin explained in a speech last month, by "restraining financial institutions from excessively extending credit." How? "Some of the significant regulatory tools for addressing asset bubbles—both those in widespread use and those on the frontier of regulatory thought—are capital regulation, liquidity regulation, regulation of margins and haircuts in securities funding transactions, and restrictions on credit underwriting."

This is not traditional regulation—stable, predictable rules that financial institutions live by to reduce the chance and severity of financial crises. It is active, discretionary micromanagement of the whole financial system. A firm's managers may follow all the rules but still be told how to conduct their business, whenever the Fed thinks the firm's customers are contributing to booms or busts the Fed disapproves of.

Macroprudential policy explicitly mixes the Fed's macroeconomic and financial stability roles. Interest-rate policy will be used to manipulate a broad array of asset prices, and financial regulation will be used to stimulate or cool the economy.

Foreign central banks are at it already, and a growing consensus among international policy types has left the Fed's relatively muted discussions behind. The sweeping agenda laid out in "Macroprudential Policy: An Organizing Framework," a March 2011 International Monetary Fund paper, is a case in point.

"The monitoring of systemic risks by macroprudential policy should be comprehensive," the IMF paper explains. "It should cover all potential sources of such risk no matter where they reside." Chillingly, policy "should be able to encompass all important providers of credit, liquidity, and maturity transformation regardless of their legal form, as well as individual systemically important institutions and financial market infrastructures."

What could possibly go wrong?

It's easy enough to point out that central banks don't have a great track record of diagnosing what they later considered "bubbles" and "systemic" risks. The Fed didn't act on the tech bubble of the 1990s or the real-estate bubble of the last decade. European bank regulators didn't notice that sovereign debts might pose a problem. Also, during the housing boom, regulators pressured banks to lend in depressed areas and to less creditworthy customers. That didn't pan out so well.

More deeply, the hard-won lessons of monetary policy apply with even greater force to the "macroprudential" project.

First lesson: Humility. Fine-tuning a poorly understood system goes quickly awry. The science of "bubble" management is, so far, imaginary.

Consider the idea that low interest rates spark asset-price "bubbles." Standard economics denies this connection; the level of interest rates and risk premiums are separate phenomena. Historically, risk premiums have been high in recessions, when interest rates have been low.

One needs to imagine a litany of "frictions," induced by institutional imperfections or current regulations, to connect the two. Fed Governor Jeremy Stein gave a thoughtful speech in February about how such frictions might work, while admitting that our real knowledge goes little deeper than academic cocktail-party speculation.

Based on this much understanding, is the Fed ready to manage bubbles by varying interest rates? Mr. Stein thinks so, arguing that "in an environment of significant uncertainty . . . standards of evidence should be calibrated accordingly," i.e., down. The Fed, he says, should not wait for "decisive proof of market overheating." He wants "greater overlap in the goals of monetary policy and regulation." The history of fine-tuning disagrees. And once the Fed understands market imperfections, perhaps it should work to remove them, not exploit them for price manipulation.

Second lesson: Follow rules. Monetary policy works a lot better when it is transparent and predictable and keeps to well-established traditions and limitations than when the Fed shoots from the hip, following the passions of the day. The economy does not react mechanically to policy but feeds on expectations and moral hazards. The Fed sneezed that bond buying might not last forever and markets swooned. As it comes to examine every market and target every single asset price, the Fed can induce wild instability as markets guess at the next anti-bubble decree.

Third lesson: Limited power is the price of political independence. Once the Fed manipulates prices and credit flows throughout the financial system, it will be whipsawed by interest groups and their representatives.

How will home builders react if the Fed decides their investments are bubbly and restricts their credit? How will bankers who followed all the rules feel when the Fed decrees their actions a "systemic" threat? How will financial entrepreneurs in the shadow banking system, peer-to-peer lending innovators, etc., feel when the Fed quashes their efforts to compete with banks?

Will not all of these people call their lobbyists, congressmen and administration contacts, and demand change? Will not people who profit from Fed interventions do the same? Willy-nilly financial dirigisme will inevitably lead to politicization, cronyism, a sclerotic, uncompetitive financial system and political oversight. Meanwhile, increasing moral hazard and a greater conflagration are sure to follow when the Fed misdiagnoses the next crisis.

The U.S. experienced a financial crisis just a few years ago. Doesn't the country need the Fed to stop another one? Yes, but not this way. Instead, we need a robust financial system that can tolerate "bubbles" without causing "systemic" crises. Sharply limiting run-prone, short-term debt is a much easier project than defining, diagnosing and stopping "bubbles." [For more, see this previous post] The latter is a hopeless quest, dripping with the unanticipated consequences of all grandiose planning schemes.

In the current debate over who will be the next Fed chair, we should not look for a soothsayer who will clairvoyantly spot trouble brewing, and then direct the tiniest details of financial flows. Rather, we need a human who can foresee the future no better than you and I, who will build a robust financial system as a regulator with predictable and limited powers.

*****

Bonus extras. A few of many delicious paragraphs cut for space.

Do not count on the Fed to voluntarily limit its bubble-popping, crisis management, or regulatory discretion. Vast new powers come at an institutionally convenient moment. It’s increasingly obvious how powerless conventional monetary policy is. Four and a half percent of the population stopped working between 2008 and 2010, and the employment-population ratio has not budged since. GDP fell seven and a half percent below “potential” in 2009 and is still six points below. Two trillion didn’t dent the thing, and surely another two wouldn’t make a difference either. How delicious, in the name of “systemic stability,” to just step in and tell the darn banks what to do, and be important again!

Ben Bernanke on macroprudential policy:

"For example, a traditional microprudential examination might find that an individual financial institution is relying heavily on short-term wholesale funding, which may or may not induce a supervisory response. The implications of that finding for the stability of the broader system, however, cannot be determined without knowing what is happening outside that particular firm. Are other, similar financial firms also highly reliant on short-term funding? If so, are the sources of short-term funding heavily concentrated? Is the market for short-term funding likely to be stable in a period of high uncertainty, or is it vulnerable to runs? If short-term funding were suddenly to become unavailable, how would the borrowing firms react--for example, would they be forced into a fire sale of assets, which itself could be destabilizing, or would they cease to provide funding or critical services for other financial actors? Finally, what implications would these developments have for the broader economy? ..."

As if in our lifetimes anyone will have precise answers to questions like these. In case you didn't get the warning,

"... And the remedies that might emerge from such an analysis could well be more far-reaching and more structural in nature than simply requiring a few firms to modify their funding patterns."

A big thank you to my editor at WSJ, Howard Dickman, who did more than the usual pruning of prose and asking tough questions.

Friday, August 23, 2013

MOOC

I will be running a MOOC (massive open online course) this fall. Follow the link for information. The class will roughly parallel my PhD asset pricing class. We'll run through most of the "Asset Pricing" textbook. The videos are all shot; now I'm putting together quizzes... which accounts for some of my recent blog silence.

So, if you're interested in the theory of academic asset pricing, or you've wanted to work through the book, here's your chance. It's designed for PhD students, aspiring PhD students, advanced MBAs, financial engineers, people who are working in industry who might like to study PhD level finance but don't have the time, and so on. It's not easy; we start with a stochastic calculus review! But I'm emphasizing the intuition (what the models mean, why we use them, and so on) over the mathematics.


Labels:

Economists,

Finance,

Thesis topics

Tuesday, July 30, 2013

On Au

Greg Mankiw has a cool New York Times article and blog post, "On Au," analyzing the case to be made for gold in a portfolio, including a cute problem set. (Picture at left from Greg's website. I need to get Sally painting some gold pictures!)

I think Greg made two basic mistakes in analysis.

First, he assumed that returns (gold, bonds, stocks) are independent over time, so that one-period mean-variance analysis is the appropriate way to look at investments. Such analysis already makes it hard to understand why people hold so many long-term bonds. They don't earn much more than short-term bonds, and have a lot more variance. But long-term bonds have a magic property: When the price goes down -- bad return today -- the yield goes up -- better returns tomorrow. Thus, because of this dynamic property (negative autocorrelation), long-term bonds are risk free to long-term investors even though their short-term mean-variance properties look awful.
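To see the telescoping logic behind that claim, here is a minimal Python sketch, assuming a single zero-coupon bond that pays 1 at maturity and a made-up random-walk yield path (the 10-year maturity, 3% starting yield and 1% yield volatility are illustrative, not estimates). The year-by-year returns depend on the yield path, but their product always equals 1/P_0, so an investor who holds to maturity faces no nominal risk.

```python
import math
import random


def zero_price(yield_, years_left):
    """Price of a zero-coupon bond paying 1 at maturity (continuous compounding)."""
    return math.exp(-yield_ * years_left)


def hold_to_maturity(maturity=10, y0=0.03, vol=0.01, seed=0):
    """Simulate a hypothetical yield path; return (initial price, list of 1-year gross returns)."""
    rng = random.Random(seed)
    yields = [y0]
    for _ in range(maturity - 1):
        # Illustrative random-walk yield, floored at zero to keep numbers tame.
        yields.append(max(0.0, yields[-1] + rng.gauss(0.0, vol)))
    # Bond price at the start of each year, then the face value of 1 at maturity.
    prices = [zero_price(y, maturity - t) for t, y in enumerate(yields)] + [1.0]
    returns = [prices[t + 1] / prices[t] for t in range(maturity)]
    return prices[0], returns


if __name__ == "__main__":
    for seed in range(3):
        p0, rets = hold_to_maturity(seed=seed)
        # Yearly returns differ across paths, but the cumulative return telescopes to 1/p0.
        print([round(r, 4) for r in rets[:3]], round(math.prod(rets), 6), round(1 / p0, 6))
```

A price drop in one year raises the yield and hence the return over the remaining years by exactly enough to restore the promised payoff; that is the negative autocorrelation at work.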

Gold likely has a similar profile. Gold prices go up and down in the short run. But relative prices mean-revert in the long run, so the long run risk and short run risk are likely quite different.
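One way to make "long-run risk differs from short-run risk" concrete is a variance ratio. The sketch below assumes, purely for illustration, that the log relative price of gold follows a stationary AR(1) with persistence phi; the value phi = 0.9 is made up, not an estimate. For such a mean-reverting series the variance of k-period changes grows more slowly than k times the one-period variance, so the ratio falls below 1, while a random walk (phi = 1) keeps it at exactly 1.

```python
def variance_ratio(phi, k):
    """Var(x[t+k] - x[t]) / (k * Var(x[t+1] - x[t])) for x[t] = phi * x[t-1] + e[t].

    Uses Var(x[t+k] - x[t]) = 2 * Var(x) * (1 - phi**k), so Var(x) cancels.
    phi = 1 is treated as a random walk, whose variance ratio is exactly 1.
    """
    if phi == 1.0:
        return 1.0
    if not -1.0 < phi < 1.0:
        raise ValueError("need -1 < phi <= 1")
    if k < 1:
        raise ValueError("need k >= 1")
    return (1.0 - phi ** k) / (k * (1.0 - phi))


if __name__ == "__main__":
    # Hypothetical persistence of 0.9 per period; the ratio shrinks as the horizon grows.
    for k in (1, 12, 60, 120):
        print(k, round(variance_ratio(0.9, k), 3))
```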

Second, deeper, Greg forgot the average investor theorem. The average investor holds the value-weighted portfolio of all assets. And all deviations from market weights are a zero sum game. I can only earn positive alpha if someone else earns negative alpha. That's not a theorem, it's an identity. You should only hold something different than market weights if you are identifiably different than the market average investor. If, for example, you are a tenured professor, then your income stream is less sensitive to stock market fluctuations than other people, and that might bias you toward more stocks.
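Because this is an accounting identity rather than a model, a few lines of arithmetic verify it. The sketch below uses made-up wealth levels, holdings and realized returns, and measures each investor's dollar gain or loss relative to the market portfolio, a simplified stand-in for alpha that ignores risk adjustment. Whatever numbers you plug in, the gains and losses sum to zero.

```python
def market_relative_dollars(wealth, holdings, returns):
    """Dollar gain or loss of each investor relative to holding the market portfolio.

    wealth:   each investor's wealth (hypothetical numbers)
    holdings: each investor's portfolio weights over the same assets (each list sums to 1)
    returns:  realized return on each asset
    Returns (market return, list of wealth * (own return - market return)).
    """
    total = sum(wealth)
    n_assets = len(returns)
    # Market weights are, by construction, the wealth-weighted average of all holdings.
    market_w = [sum(W * h[i] for W, h in zip(wealth, holdings)) / total
                for i in range(n_assets)]
    market_ret = sum(w * r for w, r in zip(market_w, returns))
    relative = [W * (sum(w * r for w, r in zip(h, returns)) - market_ret)
                for W, h in zip(wealth, holdings)]
    return market_ret, relative


if __name__ == "__main__":
    wealth = [100.0, 50.0, 250.0]
    holdings = [[0.6, 0.3, 0.1], [0.1, 0.1, 0.8], [0.3, 0.4, 0.3]]
    returns = [0.10, -0.05, 0.02]
    mkt, rel = market_relative_dollars(wealth, holdings, returns)
    # The first investor beats the market only because the other two lag it.
    print(round(mkt, 6), [round(x, 4) for x in rel], round(sum(rel), 12))
```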

So, how does Greg analyze the demand for gold, and decide if he should hold more or less than market average weights? With mean-variance analysis. That's an instance of the answer, "I diverge from market weights because I'm smarter and better informed than the average investor." Now Greg surely is smarter than the average investor. But everyone else thinks they're smarter than average, and half of them are deluded.

In any case, Greg isn't smarter because he knows mean-variance analysis. In fact, sadly, the opposite is true. The first problem set you do in any MBA class (well, mine!) makes clear that plugging historical means and variances into a mean-variance optimizer and implementing its portfolio advice is a terrible guide to investing. Practically anything does better. 1/N does better. Means and variances are poorly estimated (Greg, how about a standard error?) and the calculation is very sensitive to its inputs.
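A minimal two-asset sketch of that instability, with hypothetical inputs: both assets have 20% volatility and a 0.9 correlation, and risk aversion is 2. Moving the expected excess returns from 6% and 6% to 7% and 5%, a change far smaller than the roughly 2.8-percentage-point standard error of a mean estimated from 50 years of annual data with 20% volatility, turns an even, modest allocation into a leveraged long-short bet. By contrast, 1/N weights don't depend on estimated means at all.

```python
import math


def mv_weights(mu, vol, corr, risk_aversion):
    """Two-asset mean-variance weights w = inverse(Sigma) @ mu / risk_aversion.

    mu: expected excess returns (pair); vol: volatilities (pair); corr: correlation.
    """
    s11, s22 = vol[0] ** 2, vol[1] ** 2
    s12 = corr * vol[0] * vol[1]
    det = s11 * s22 - s12 ** 2
    # Closed-form inverse of the 2x2 covariance matrix [[s11, s12], [s12, s22]].
    w1 = (s22 * mu[0] - s12 * mu[1]) / det / risk_aversion
    w2 = (s11 * mu[1] - s12 * mu[0]) / det / risk_aversion
    return w1, w2


if __name__ == "__main__":
    vol, corr, gamma = (0.20, 0.20), 0.9, 2.0
    print(mv_weights((0.06, 0.06), vol, corr, gamma))  # equal means: equal, modest weights
    print(mv_weights((0.07, 0.05), vol, corr, gamma))  # means 1 point apart: big long-short bet
    # Standard error of a sample mean with 20% volatility and 50 years of annual data.
    print(round(0.20 / math.sqrt(50), 4))
```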

In any case, Greg shouldn't have phrased the question, "how much gold should I hold according to mean variance analysis, presuming I'm smarter than everyone else and can profit at their expense by looking in this crystal ball?" He should have phrased the question, "how much more or less than the market average should I hold?" And "what makes me different from average to do it?"

That's especially true of a New York Times op-ed, which offers investment advice to everyone. By definition, we can't all hold more or less gold than average! If you offer advice that A should buy, and hold more than average, you need to offer advice that B should sell, and hold less than average.

I don't come down to a substantially different answer though. As Greg points out, gold is a tiny fraction of wealth. So it should be at most a tiny fraction of a portfolio.

There is all this bit about gold, guns, ammo and cans of beans. If you think about gold that way, you're thinking about gold as an out-of-the-money put option on calamitous social disruption, including destruction of the entire financial and monetary system. That might justify a different answer. And it helps make sense of why gold prices are up while TIPS indicate little expected inflation. But you don't value such options by one-period means and variances. And you still have to think why this option is more valuable to you than it is to everyone else.


Labels:

Commentary,

Finance

Monday, July 22, 2013

Are we prepared for the next financial crisis?

This is the title of a very well-prepared video made by Hal Weitzman, Dustin Whitehead and the Booth "Capital Ideas" team, based on interviews with many of our faculty. Direct link here (YouTube).

Sunday, June 23, 2013

Stopping Bank Crises Before They Start

This is a Wall Street Journal op-ed, 6/24/2013.

Regulating the riskiness of bank assets is a dead end. Instead, fix the run-prone nature of bank liabilities.

In recent months the realization has sunk in across the country that the 2010 Dodd-Frank financial-reform legislation is a colossal mess. Yet we obviously can't go back to the status quo that produced a financial catastrophe in 2007-08. Fortunately, there is an alternative.

At its core, the recent financial crisis was a run. The run was concentrated in the "shadow banking system" of overnight repurchase agreements, asset-backed securities, broker-dealers and investment banks, but it was a classic run nonetheless.

The run made the crisis. In the 2000 tech bust, people lost a lot of money, but there was no crisis. Why not? Because tech firms were funded by stock. When stock values fall you can't run to get your money out first, and you can't take a company to bankruptcy court.

This is a vital and liberating insight: To stop future crises, the financial system needs to be reformed so that it is not prone to runs. Americans do not have to trust newly wise regulators to fix Fannie Mae and Freddie Mac, end rating-agency shenanigans, clairvoyantly spot and prick "bubbles," and address every other real or perceived shortcoming of our financial system.

Runs are a pathology of financial contracts, such as bank deposits, that promise investors a fixed amount of money and the right to withdraw that amount at any time. A run also requires that the issuing institution can't raise cash by selling assets, borrowing or issuing equity. If I see you taking your money out, then I have an incentive to take my money out too. When a run at one institution causes people to question the finances of others, the run becomes "systemic," which is practically the definition of a crisis.
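The first-come, first-served logic can be sketched in a few lines. Everything here is a stylized assumption: 100 depositors are each owed $1, the bank holds $10 of cash and $90 of loans, and the loans fetch only 50 cents on the dollar if sold in a hurry. If everyone waits, the loans pay off at par and everyone gets $1; if everyone lines up, only the first 55 get paid.

```python
def payouts_in_line(n_depositors, cash, loans, fire_sale_price):
    """Pay depositors (each owed 1.0) in order of arrival until the bank's money runs out.

    The bank meets withdrawals from cash plus loans sold early at fire_sale_price
    per dollar of face value. All inputs are hypothetical.
    """
    available = cash + loans * fire_sale_price
    payouts = []
    for _ in range(n_depositors):
        pay = min(1.0, available)   # paid in full while the money lasts
        payouts.append(pay)
        available -= pay
    return payouts


if __name__ == "__main__":
    everyone_runs = payouts_in_line(100, cash=10.0, loans=90.0, fire_sale_price=0.5)
    print(everyone_runs[0], everyone_runs[54], everyone_runs[55], sum(everyone_runs))
    # If nobody runs and the loans are worth par, 10 + 90 covers all 100 depositors.
    nobody_runs = payouts_in_line(100, cash=10.0, loans=90.0, fire_sale_price=1.0)
    print(min(nobody_runs))
```

What you get depends only on your place in line, which is why seeing others withdraw is reason enough to withdraw yourself, even from a bank that would be solvent if left alone.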

By the time they failed in 2008, Lehman Brothers and Bear Stearns were funding portfolios of mortgage-backed securities with overnight debt leveraged 30 to 1. For each $1 of equity capital, the banks borrowed $30. Then, every single day, they had to borrow 30 new dollars to pay off the previous day's loans.
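The arithmetic of 30-to-1 leverage, as a short sketch: with $30 borrowed per $1 of equity the bank holds $31 of assets, so a fall of just 1/31, about 3.2%, in the value of its assets wipes out the equity, while the whole $30 of debt still has to be rolled over every day. The function names are illustrative only.

```python
def equity_after_drop(leverage, asset_drop):
    """Equity per $1 of original equity after assets fall by `asset_drop` (0.03 = 3%).

    leverage = dollars borrowed per $1 of equity, so assets start at leverage + 1.
    """
    assets = (leverage + 1.0) * (1.0 - asset_drop)
    return assets - leverage


def wipeout_drop(leverage):
    """Asset decline that exactly eliminates equity: 1 / (leverage + 1)."""
    return 1.0 / (leverage + 1.0)


if __name__ == "__main__":
    print(round(wipeout_drop(30), 4))            # about a 3.2% fall in assets
    print(round(equity_after_drop(30, 0.03), 4))  # a 3% fall leaves 7 cents of each equity dollar
```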

When investors sniffed trouble, they refused to roll over the loans. The banks' broker-dealer customers and derivatives counterparties also pulled their money out; each had the right to its money immediately, and each contract also served as a source of short-term funding for the banks. When this short-term funding evaporated, the banks instantly failed.

Clearly, overnight debt is the problem. The solution is just as clear: Don't let financial institutions issue run-prone liabilities. Run-prone contracts generate an externality, like pollution, and merit severe regulation on that basis.

Institutions that want to take deposits, borrow overnight, issue fixed-value money-market shares or any similar runnable contract must back those liabilities 100% by short-term Treasurys or reserves at the Fed. Institutions that want to invest in risky or illiquid assets, like loans or mortgage-backed securities, have to fund those investments with equity and long-term debt. Then they can invest as they please, as their problems cannot start a crisis.
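Stated as a balance-sheet test, the proposal is easy to check. In this sketch the category names are hypothetical, and it deliberately leaves out everything a real rule would need (derivatives, off-balance-sheet exposures, precise maturity definitions). Note that once runnable liabilities are fully backed by Treasurys or reserves, the balance-sheet identity forces risky assets to be funded by equity and long-term debt.

```python
from dataclasses import dataclass


@dataclass
class BalanceSheet:
    # Assets (hypothetical categories, in dollars)
    short_treasuries: float
    fed_reserves: float
    risky_assets: float      # loans, mortgage-backed securities, etc.
    # Liabilities and equity
    runnable_debt: float     # deposits, overnight repo, fixed-value money-fund shares
    long_term_debt: float
    equity: float


def run_free(b: BalanceSheet, tol: float = 1e-9) -> bool:
    """Check the rule: runnable liabilities backed 100% by short Treasurys or reserves."""
    assets = b.short_treasuries + b.fed_reserves + b.risky_assets
    liabilities = b.runnable_debt + b.long_term_debt + b.equity
    if abs(assets - liabilities) > tol:
        raise ValueError("balance sheet does not balance")
    # Given the identity above, passing this test also means risky assets
    # are funded entirely by equity and long-term debt.
    return b.runnable_debt <= b.short_treasuries + b.fed_reserves + tol


if __name__ == "__main__":
    overnight_funded = BalanceSheet(0.0, 0.0, 31.0, 30.0, 0.0, 1.0)  # 30-to-1, all overnight
    narrow_bank = BalanceSheet(60.0, 40.0, 0.0, 95.0, 0.0, 5.0)
    equity_funded = BalanceSheet(0.0, 0.0, 100.0, 0.0, 60.0, 40.0)
    print(run_free(overnight_funded), run_free(narrow_bank), run_free(equity_funded))
```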

Money-market funds that want to offer better returns by investing in riskier securities must let their values float, rather than promise a fixed value of $1 per share. Mortgage-backed securities also belong in floating-value funds, like equity mutual funds or exchange-traded funds. The run-prone nature of broker-dealer and derivatives contracts can also be reformed at small cost by fixing the terms of those contracts and their treatment in bankruptcy.

The bottom line: People who want better returns must transparently shoulder additional risk.

Some people will argue: Don't we need banks to "transform maturity" and provide abundant "safe and liquid" assets for people to invest in? Not anymore.

First, $16 trillion of government debt is enough to back any conceivable demand for fixed-value liquid assets. Money-market funds that hold Treasurys can expand to enormous size. The Federal Reserve should continue to provide abundant reserves to banks, paying market interest. The Treasury could offer reserves to the rest of us—floating-rate, fixed-value, electronically-transferable debt. There is no reason that the Fed and Treasury should artificially starve the economy of completely safe, interest-paying cash.

Second, financial and technical innovations can deliver the liquidity that once only banks could provide. Today, you can pay your monthly credit-card bill from your exchange-traded stock fund. Tomorrow, your ATM could sell $100 of that fund if you want cash, or you could bump your smartphone on a cash register to buy coffee with that fund. Liquidity no longer requires that anyone hold risk-free or fixed-value assets.

Others will object: Won't eliminating short-term funding for long-term investments drive up rates for borrowers? Not much. Floating-value investments such as equity and long-term debt that go unlevered into loans are very safe and need to pay correspondingly low returns. If borrowers pay a bit more than now, it is only because banks lose their government guarantees and subsidies.
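The reasoning here is essentially Modigliani-Miller. A minimal sketch, assuming no taxes, no government guarantees and a fixed debt rate (in reality the debt rate also moves with leverage), with a hypothetical 4% return on the loans and 3% on debt: the required return on equity rises with leverage, but the blended cost of funding the loans stays at the loans' own return. Unlevered equity in a loan portfolio simply earns that asset return.

```python
def equity_return(asset_return, debt_return, debt_to_equity):
    """Modigliani-Miller proposition II: r_E = r_A + (D/E) * (r_A - r_D)."""
    return asset_return + debt_to_equity * (asset_return - debt_return)


def funding_cost(asset_return, debt_return, debt_to_equity):
    """Value-weighted average of equity and debt returns; equals r_A under M-M."""
    equity_share = 1.0 / (1.0 + debt_to_equity)
    debt_share = debt_to_equity / (1.0 + debt_to_equity)
    r_e = equity_return(asset_return, debt_return, debt_to_equity)
    return equity_share * r_e + debt_share * debt_return


if __name__ == "__main__":
    # Leverage changes who bears the risk, not the total cost of funding the loans.
    for d_e in (0.0, 1.0, 30.0):
        print(d_e, round(equity_return(0.04, 0.03, d_e), 4),
              round(funding_cost(0.04, 0.03, d_e), 4))
```

In this frictionless benchmark, pushing banks toward equity funding leaves borrowing costs unchanged; any increase in the real world comes from the wedges the model leaves out, such as guarantees and subsidies.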

In the 19th century, private banks issued currency. A few crises later, we stopped that and gave the federal government a monopoly on currency issue. Now that short-term debt is our money, we should treat it the same way, and for exactly the same reasons.

In the wake of Great Depression bank runs, the U.S. government chose to guarantee bank deposits, so that people no longer had the incentive to get out first. But guaranteeing a bank's deposits gives bank managers a huge incentive to take risks.

So we tried to use regulation to keep the banks from taking risks. The banks got around the regulations, and "shadow banks" grew around the regulated system. Since then we have been on a treadmill of ever-larger bailouts, ever-expanding government guarantees, ever-expanding attempts to regulate risks, ever-more powerful regulators and ever-larger crises.

This approach will never work. Rather than try to regulate the riskiness of bank assets, we should fix the run-prone nature of their liabilities. Fortunately, modern financial technology surmounts the economic obstacles that impeded this approach in the 1930s. Now we only have to surmount the obstacle of entrenched interests that profit from the current dysfunctional system.

Regulating the riskiness of bank assets is a dead end. Instead, fix the run-prone nature of bank liabilities.

In recent months the realization has sunk in across the country that the 2010 Dodd-Frank financial-reform legislation is a colossal mess. Yet we obviously can't go back to the status quo that produced a financial catastrophe in 2007-08. Fortunately, there is an alternative.

At its core, the recent financial crisis was a run. The run was concentrated in the "shadow banking system" of overnight repurchase agreements, asset-backed securities, broker-dealers and investment banks, but it was a classic run nonetheless.

The run made the crisis. In the 2000 tech bust, people lost a lot of money, but there was no crisis. Why not? Because tech firms were funded by stock. When stock values fall you can't run to get your money out first, and you can't take a company to bankruptcy court.

This is a vital and liberating insight: To stop future crises, the financial system needs to be reformed so that it is not prone to runs. Americans do not have to trust newly wise regulators to fix Fannie Mae and Freddie Mac, end rating-agency shenanigans, clairvoyantly spot and prick "bubbles," and address every other real or perceived shortcoming of our financial system.

Runs are a pathology of financial contracts, such as bank deposits, that promise investors a fixed amount of money and the right to withdraw that amount at any time. A run also requires that the issuing institution can't raise cash by selling assets, borrowing or issuing equity. If I see you taking your money out, then I have an incentive to take my money out too. When a run at one institution causes people to question the finances of others, the run becomes "systemic," which is practically the definition of a crisis.

By the time they failed in 2008, Lehman Brothers and Bear Stearns were funding portfolios of mortgage-backed securities with overnight debt leveraged 30 to 1. For each $1 of equity capital, the banks borrowed $30. Then, every single day, they had to borrow 30 new dollars to pay off the previous day's loans.

When investors sniffed trouble, they refused to roll over the loans. The bank's broker-dealer customers and derivatives counterparties also pulled their money out, each also having the right to money immediately, but each contract also serving as a source of short-term funding for the banks. When this short-term funding evaporated, the banks instantly failed.

Clearly, overnight debt is the problem. The solution is just as clear: Don't let financial institutions issue run-prone liabilities. Run-prone contracts generate an externality, like pollution, and merit severe regulation on that basis.

Institutions that want to take deposits, borrow overnight, issue fixed-value money-market shares or any similar runnable contract must back those liabilities 100% by short-term Treasurys or reserves at the Fed. Institutions that want to invest in risky or illiquid assets, like loans or mortgage-backed securities, have to fund those investments with equity and long-term debt. Then they can invest as they please, as their problems cannot start a crisis.

Money-market funds that want to offer better returns by investing in riskier securities must let their values float, rather than promise a fixed value of $1 per share. Mortgage-backed securities also belong in floating-value funds, like equity mutual funds or exchange-traded funds. The run-prone nature of broker-dealer and derivatives contracts can also be reformed at small cost by fixing the terms of those contracts and their treatment in bankruptcy.

The bottom line: People who want better returns must transparently shoulder additional risk.

Some people will argue: Don't we need banks to "transform maturity" and provide abundant "safe and liquid" assets for people to invest in? Not anymore.

First, $16 trillion of government debt is enough to back any conceivable demand for fixed-value liquid assets. Money-market funds that hold Treasurys can expand to enormous size. The Federal Reserve should continue to provide abundant reserves to banks, paying market interest. The Treasury could offer reserves to the rest of us—floating-rate, fixed-value, electronically-transferable debt. There is no reason that the Fed and Treasury should artificially starve the economy of completely safe, interest-paying cash.

Second, financial and technical innovations can deliver the liquidity that once only banks could provide. Today, you can pay your monthly credit-card bill from your exchange-traded stock fund. Tomorrow, your ATM could sell $100 of that fund if you want cash, or you could bump your smartphone on a cash register to buy coffee with that fund. Liquidity no longer requires that anyone hold risk-free or fixed-value assets.

Others will object: Won't eliminating short-term funding for long-term investments drive up rates for borrowers? Not much. Floating-value investments such as equity and long-term debt that go unlevered into loans are very safe and need to pay correspondingly low returns. If borrowers pay a bit more than now, it is only because banks lose their government guarantees and subsidies.

In the 19th century, private banks issued currency. A few crises later, we stopped that and gave the federal government a monopoly on currency issue. Now that short-term debt is our money, we should treat it the same way, and for exactly the same reasons.

In the wake of the Great Depression's bank runs, the U.S. government chose to guarantee bank deposits, so that people no longer had the incentive to get out first. But guaranteeing a bank's deposits gives bank managers a huge incentive to take risks.

So we tried to regulate banks to keep them from taking risks. The banks got around the regulations, and "shadow banks" grew around the regulated system. Since then we have been on a treadmill of ever-larger bailouts, ever-expanding government guarantees, ever-expanding attempts to regulate risks, ever-more powerful regulators and ever-larger crises.

This approach will never work. Rather than try to regulate the riskiness of bank assets, we should fix the run-prone nature of their liabilities. Fortunately, modern financial technology surmounts the economic obstacles that impeded this approach in the 1930s. Now we only have to surmount the obstacle of entrenched interests that profit from the current dysfunctional system.

Tuesday, June 18, 2013

Two seconds

The weekend Wall Street Journal had an interesting article about high-speed trading, "Traders Pay for an Early Peek at Key Data." Through Thomson Reuters, traders can get the University of Michigan consumer confidence survey results two seconds ahead of everyone else. They then trade S&P 500 ETFs on the information.

Naturally, the article was about whether this is fair and ethical, with a pretty strong sense of no (and surely pressure on the University of Michigan not to offer the service.)

It didn't ask the obvious question: Traders need willing counterparties. Knowing that this is going on, who in their right mind is leaving limit orders on the books in the two seconds before the confidence surveys come out?

OK, you say, mom and pop are too unsophisticated to know what's going on. But even mom and pop place their orders through institutions which use trading algorithms to minimize price impact. It takes one line of code to add "do not leave limit orders in place during the two seconds before the consumer confidence surveys come out."
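To make the "one line of code" concrete, here is a minimal sketch of such a guard. It is hypothetical, not any broker's actual algorithm: the release time is an input you would have to supply, and the two-second head start is taken from the article. When the function returns False, the trading algorithm would cancel or withhold resting limit orders.

```python
from datetime import datetime, timedelta

# Head start that paying subscribers get, per the article (two seconds).
EARLY_PEEK = timedelta(seconds=2)


def may_rest_limit_order(now: datetime, release_time: datetime) -> bool:
    """Return False while early-access traders already know the survey
    result but the public does not, i.e. in [release_time - 2s, release_time)."""
    window_start = release_time - EARLY_PEEK
    return not (window_start <= now < release_time)


# Hypothetical release time, for illustration only.
release = datetime(2013, 6, 14, 10, 0, 0)
print(may_rest_limit_order(release - timedelta(seconds=5), release))  # True
print(may_rest_limit_order(release - timedelta(seconds=1), release))  # False
```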

In short, the article leaves the impression that investors are getting taken. But it's so easy to avoid being taken that it seems a bit of a puzzle that anyone can make money at this game.

I hope readers with more market experience than I can answer the puzzle: Who is it out there that is dumb enough to leave limit orders for S&P500 ETFs outstanding in the 2 seconds before the consumer confidence surveys come out?


| Source: Wall Street Journal |


Labels:

Finance,

Thesis topics

Thursday, June 6, 2013

Two on financial reform

I recently read two interesting items in the long-running financial regulation saga.


First, a very thoughtful, clear, and succinct speech by Philadelphia Fed President Charles Plosser titled "Reducing Financial Fragility by Ending Too Big to Fail." It's interesting to see a (another?) Fed President basically say that the whole Dodd-Frank / Basel structure is wrong-headed. Two little gems:

There is probably no better example of rule writing that violates the basic principles of simple, robust regulation than risk-weighted capital calculations.

No surprise, I agree.

...

Remember that Title II resolution is available only when there are concerns about systemic risk. Just imagine the highly political issue of determining whether a firm is systemically important, especially if it has not been designated so by the Financial Stability Oversight Committee beforehand....

...Creditors will perceive that their payoffs will be determined through a regulatory resolution process, which could be influenced through political pressure rather than subject to the rule of law

Second, Anat Admati and Martin Hellwig have an addition to their "Banker's new clothes" book (my review), 23 Flawed Claims Debunked. Don't miss the fun footnotes. Anat and Martin get some sort of medal for patience in wading through dreck.

Labels:

Finance,

Regulation

Friday, May 17, 2013

More Interest-Rate Graphs

For a talk I gave a week or so ago, I made some more interest-rate graphs. This extends the last post on the subject. It also might be useful if you're teaching forward rates and expectations hypothesis.

The question: Are interest rates going up or down, especially long-term rates? Investors obviously care; they want to know whether they should put money in long-term bonds vs. short-term bonds. As one who worries about debt and inflation, I'm also sensitive to the criticism that market rates are very low, forecasting apparently low rates for a long time. Yes, markets never see bad times coming, and markets 3 years ago got it way wrong thinking rates would be much higher than they are today (see last post), but still, markets don't seem to worry.

But rather than talk, let's look at the numbers. I start with the forward curve. The forward rate is the "market expectation" of interest rates, in that it is the rate you can contract today to borrow in the future. If you know better than the forward rate, you can make a lot of money.
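As a sketch of where a forward curve comes from, assume zero-coupon yields with continuous compounding (an assumption for illustration; the graphs here may use a different convention). The forward rate for year k is the rate you lock in today by buying a (k+1)-year zero and selling a k-year zero, which works out to f(k) = (k+1) y(k+1) - k y(k).

```python
def forwards_from_yields(yields):
    """One-year forward rates from zero-coupon yields (continuous compounding).

    yields[k] is the (k+1)-year yield. The forward rate for year k is the
    rate locked in by buying a (k+1)-year zero and selling a k-year zero:
        f[k] = (k+1) * y(k+1) - k * y(k),  with f[0] = one-year yield.
    """
    forwards = []
    for k, y in enumerate(yields):
        previous_total = k * yields[k - 1] if k > 0 else 0.0
        forwards.append((k + 1) * y - previous_total)
    return forwards


# Made-up yields of 1%, 1.5%, 2% on 1-, 2-, 3-year zeros, for illustration.
print(forwards_from_yields([0.01, 0.015, 0.02]))  # roughly [0.01, 0.02, 0.03]
```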

Here, I unite the recent history of interest rates together with forecasts made from today's forward curve. The one year rate (red) is just today's forward curve. I find the longer rates as the average of the forward curves on those dates. Today's forward curve is the market forecast of the future forward curve too, so to find the forecast 5 year bond yield in 2020, I take the average of today's forward rates for 2020, 2021, ..., 2024.
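The averaging rule can be written as a few lines of code. This is a minimal sketch; the forward curve below is made up for illustration (in percent, with year 0 standing for 2013), not the data behind the graph.

```python
def forecast_yield(forwards, start, maturity):
    """Forecast of the `maturity`-year yield `start` years from now.

    forwards[k] is today's one-year forward rate for year k (k = 0 is
    today's one-year rate). As in the text, the forecast is the average of
    today's forward rates over the years the future bond would cover.
    """
    span = forwards[start:start + maturity]
    if len(span) < maturity:
        raise ValueError("forward curve is too short for this horizon")
    return sum(span) / maturity


# Made-up forward curve in percent, year 0 = 2013, for illustration only.
fwd = [0.2, 0.5, 1.0, 1.6, 2.2, 2.7, 3.1, 3.4, 3.7, 3.9, 4.0, 4.0]
print(forecast_yield(fwd, 7, 5))  # 5-year yield forecast for 2020: about 3.8
```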

I found it rather surprising just how much, and how fast, markets still think interest rates will rise. (Or, perhaps, how large the risk premium is. If you know enough to ask about Q measure or P measure, you know enough to answer your own question.)

How can the forecast rise faster than the actual long term yields? Well, remember that long yields are the average of expected future short rates, and if short rates are below today's 10 year rate for 5 years, then they must be above today's 10 year rate for another 5 years. So, it's a misconception to read from today's 2% 10 year rate that markets expect interest rates to be 2% in the future. Markets expect a rise to 4% within 10 years.
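The same arithmetic in symbols, as a sketch under the expectations hypothesis (ignoring the risk premium) and with made-up numbers for the first five years. The 10-year yield is the average of expected one-year rates:

$$
y^{(10)}_t = \frac{1}{10}\sum_{k=0}^{9} E_t\, r_{t+k}
$$

If $y^{(10)}_t = 2\%$ and the expected one-year rates over years 0 to 4 average $1\%$, the average $\bar r$ over years 5 to 9 must satisfy

$$
2\% = \frac{1}{10}\left(5 \times 1\% + 5\,\bar r\right) \quad\Longrightarrow\quad \bar r = 3\%,
$$

so the later short rates sit well above today's 2% ten-year yield.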

The forward curve has the nice property that if interest rates follow this forecast, then returns on bonds of all maturities are always exactly the same. The higher yields of long-term bonds exactly compensate for the price declines when interest rates rise. I graphed returns on bonds of different maturities here to make that point.
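This property is easy to check numerically. The sketch below assumes zero-coupon bonds, continuous compounding, and log returns (simplifications of mine, not stated in the post), and a made-up forward curve. If next year's forward curve is exactly today's curve rolled forward one year, every maturity earns today's one-year rate.

```python
def log_price(forwards, n):
    """Log price of an n-year zero-coupon bond: minus the sum of the
    first n one-year forward rates (continuous compounding)."""
    return -sum(forwards[:n])


def one_year_returns(forwards, maturities):
    """One-year log returns on n-year zeros when the forward curve evolves
    exactly as forecast, i.e. next year's f(k) equals today's f(k+1)."""
    next_forwards = forwards[1:]
    return {
        n: log_price(next_forwards, n - 1) - log_price(forwards, n)
        for n in maturities
    }


# Made-up upward-sloping forward curve (decimal rates), for illustration.
fwd = [0.002, 0.005, 0.010, 0.016, 0.022, 0.027, 0.031, 0.034, 0.037, 0.039]
print(one_year_returns(fwd, [1, 2, 5, 10]))  # every value is about 0.002
```

The long bond's higher yield is exactly offset by its price decline as rates rise along the forecast path.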


So, Mr. Bond speculator, if you believe the forecast in the first graph, it makes absolutely no difference whether you buy long or short. Otherwise, decide whether you think rates will rise faster or slower than the forward curve.

Now, let's think about other scenarios. One possibility is Japan. Interest rates get stuck at zero for a decade. This would come with sclerotic growth, low inflation, and a massive increase in debt, as it has in Japan. Eventually that debt is unsustainable, but as Japan shows, it can go on quite a long time. What might that look like?

Here is a "Japan scenario." I set the one year rate to zero forever. I only changed the level of the market forecast, however, not the slope. Thus, to form the expected forward curve in 2020, I shifted today's forward curve downwards so that the 2020 rate is zero, but other rates rise above that just as they do now.
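One reading of this construction, as a sketch: take today's forecast of the future curve (today's forwards from that date on) and shift it down in parallel until its first rate is zero. Whether the author shifted exactly this piece of the curve is my assumption, and the numbers are made up.

```python
def japan_scenario(forwards, horizon):
    """Expected forward curve `horizon` years from now in a 'Japan' scenario.

    Assumption: start from today's forecast of that future curve (today's
    forwards from `horizon` on) and shift it down in parallel so that the
    one-year rate on that date is zero. Only the level changes; the slope
    (how rates rise above the short rate) is kept.
    """
    baseline = forwards[horizon:]
    shift = baseline[0]
    return [f - shift for f in baseline]


fwd = [0.2, 0.5, 1.0, 1.6, 2.2, 2.7, 3.1, 3.4, 3.7, 3.9, 4.0, 4.0]  # made up, percent
print(japan_scenario(fwd, 7))  # starts at 0.0, then rises as today's curve does
```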

This scenario is another boon to long-term bond holders. They already got two big presents. Notice the two upward camel humps in long-term rates: those were forecasts of rate rises that didn't work out, and people who bought long-term bonds made money.

In a Japan scenario that happens again. Holders of long-term government bonds rejoice at their 2% yields. They get quite nice returns, shown left, as rates fail to rise as expected and the price of their bonds rises. Until the debt bomb explodes.

OK, what if things go the other way? What would an unexpectedly large rise in interest rates look like?

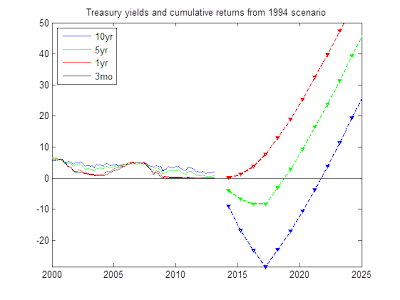

For example, February 1994 looked a lot like today, and then rates all of a sudden jumped up when the Fed started tightening.

To generate a 1994 style scenario from today's yields, I did the opposite of the Japan scenario. I took today's forward curve and added 1%, 2%, 3% and 4% to the one-year rate forecast. As with the Japan scenario, I shifted the whole forward curve up on those dates. We'll play with forward steepness in a moment.

Here are cumulative returns from the 1994 scenario. Long-term bonds take a beating, of course. Returns all gradually rise as interest rates rise. (These are returns to strategies that keep a constant maturity, i.e., buy a 10 year bond, sell it next year as a 9 year bond, buy a new 10 year bond, etc.)
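A stripped-down version of this calculation can be sketched as follows. Assumptions of mine: zero-coupon bonds, continuous compounding, log returns, a made-up flat 2% forward curve, and the 1, 2, 3, 4 point shifts applied in years 1 through 4.

```python
def level_shift_scenario(forwards, shifts):
    """Expected forward curves for years t = 0, 1, 2, ...: today's forward
    curve rolled forward t years and shifted up in parallel by shifts[t]."""
    return [[f + s for f in forwards[t:]] for t, s in enumerate(shifts)]


def constant_maturity_returns(curves, n):
    """Annual log returns from buying an n-year zero each year and selling
    it a year later as an (n-1)-year zero (zero-coupon, continuous
    compounding: a log price is minus the sum of the forwards it spans)."""
    returns = []
    for today, next_year in zip(curves, curves[1:]):
        buy = -sum(today[:n])
        sell = -sum(next_year[:n - 1])
        returns.append(sell - buy)
    return returns


# Made-up flat 2% forward curve; the 1994-style path adds 1, 2, 3, 4 points.
flat = [0.02] * 20
curves = level_shift_scenario(flat, [0.0, 0.01, 0.02, 0.03, 0.04])
for n in (1, 10):
    print(n, [round(r, 4) for r in constant_maturity_returns(curves, n)])
```

With these inputs the 10-year strategy loses about 7% in the first year while the 1-year strategy earns 2%, and both returns then creep up year by year as rates rise.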

These have been fun, but I've only changed the level of the forward curve forecast, not the slope. Implicitly, I've gone along with the idea that the Fed controls everything about interest rates. If you worry, as I do, you worry that long rates can go up all on their own. Japan's 10 year rate has been doing this lately. When markets lose faith, long rates rise. Central bankers see "confidence" or "speculators" or "bubbles" or "conundrums." What does that look like?

To generate a "steepening" scenario, I imagined that markets one year from now decide that interest rates in 2017 will spike up 5 percentage points. This may be a "fear" not an "expectation," i.e. a rise in risk premium.

Then, the 5 and 10 year rates rise immediately, even though the Fed (red line) didn't do anything to the one-year rate. The bond market vigilantes stage a strike on long term debt.
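Why long yields jump while the one-year rate sits still follows from yields being averages of forward rates. A minimal sketch with a made-up flat 2% curve seen from 2014, so 2017 is three years ahead: a 5-point bump to that one forward leaves the one-year rate alone, raises the 5-year yield by 5/5 = 1 point, and the 10-year by 5/10 = 0.5 point.

```python
def yield_from_forwards(forwards, n):
    """n-year yield as the average of the first n one-year forward rates."""
    return sum(forwards[:n]) / n


def steepen(forwards, year, bump):
    """Copy of the forward curve with `bump` added to a single future year."""
    bumped = list(forwards)
    bumped[year] += bump
    return bumped


# Made-up flat 2% curve (in percent) seen from 2014; 2017 is 3 years ahead.
before = [2.0] * 10
after = steepen(before, 3, 5.0)
for n in (1, 5, 10):
    print(n, yield_from_forwards(before, n), yield_from_forwards(after, n))
```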

Here are the consequences for cumulative returns of my steepening scenario. The long term bonds are hit much more than the shorter term bonds. This really is a bloodbath for 10 year and higher investors, leaving those under 5 years much less hurt.

So what will happen? I don't know; I don't make forecasts. But I do think it's useful to generate some vaguely coherent scenarios. The forward curve is not a rock-solid "this is what will happen" forecast. The forward curve adds up all of these possibilities, with probabilities assigned to each, plus a risk premium. There is a lot of uncertainty, and good portfolio formation starts with risk management, not chasing alpha.


Labels:

Finance,

Inflation,

Monetary Policy,

Talks

Wednesday, May 8, 2013

Finance: Function Matters, not Size

"Finance: Function Matters, not Size" This is the new title of the published version of "The Size of Finance," which I posted on this blog here as a working paper. If you enjoyed the original, here is the better final version. If you didn't, here it is all fresh and new.

Published version (my webpage)

Published version (Journal of Economic Perspectives)

"Growth of the Financial Sector" symposium (Journal of Economic Perspectives)


Saturday, May 4, 2013

Floating-rate Treasury debt

Last week the Treasury announced that it is going ahead with floating-rate debt, and gave some details on how it will work. The Wall Street Journal coverage is here and the Treasury announcement is here. I wrote about the issue at some length last fall, here (and freely admit some of today's essay repeats points made there).


Right now, the Treasury rolls over a lot of debt. For example, about one and a half trillion dollars is in the form of Treasury bills, which mature in less than a year. So, every year, the Treasury sells one and a half trillion dollars of new bills, which it uses to pay off one and a half trillion dollars of old bills.

With floating-rate debt, all the buying and selling is avoided. Instead, the Treasury periodically sets a new rate, so that the floating rate security has value one dollar (just as the maturing bill would have). It's the same thing, except the bill sits in the investor's pocket.

When interest rates go up, fixed-coupon long term debt falls in price. Floating-rate debt does not -- the interest payment goes up, so that the value of the debt is stable. Thus, even though its maturity may be long -- the Treasury mentioned two years -- the value of the debt is as safe as that of very short-term debt.
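A small numerical sketch of the difference, under idealized assumptions of mine: a flat term structure, annual payments, and a floater whose coupon resets to exactly the rate used for discounting. Actual Treasury FRNs reset to an index, so their prices wander somewhat from par.

```python
def price(cash_flows, rate):
    """Present value of annual cash flows paid at years 1, 2, ... discounted
    at a single flat annual rate."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows, start=1))


# Two-year note, $100 face, issued when rates are 2%.
fixed_note = [2.0, 102.0]           # 2% coupon locked in
print(round(price(fixed_note, 0.02), 2))    # 100.0 at issue
print(round(price(fixed_note, 0.04), 2))    # falls below 100 when rates hit 4%

# Idealized floater: after the rise its coupon resets to 4%.
floater = [4.0, 104.0]
print(round(price(floater, 0.04), 2))       # back at 100.0
```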

Homeowners are familiar with the system. An adjustable rate mortgage works the same way. You could take out a one-year balloon mortgage and refinance every year. But the adjustable rate is a lot simpler.

Now, I'm usually a Modigliani-Miller fan, and from the point of view of frictionless finance, it doesn't make any difference whether the Treasury has floating rate debt or rolls over debt. So why do I like this so much?

As a minor benefit, floating rate debt is just a little bit safer. It is possible that markets refuse to roll over debt, leaving the Treasury technically insolvent, unable to pay the principal on its existing debt. This is basically what happened in Greece. With floating rate debt, the Treasury would still face a crisis, unable to borrow new money, and forced next month to pay huge interest on the floating rate debt. But at least the crisis would be postponed a bit and would not result in immediate default. This is a very unlikely circumstance, you say, and I agree, but we all thought the last financial crisis was unlikely too until it happened.

A deeper benefit, I think, is that floating-rate Treasury debt opens the way to a run-free financial system. I want huge capital requirements on banks for all assets except floating-rate or short term Treasury debt. And I don't like runs in money market funds.

The usual answer is, "that's nice, but people need lots of safe securities. We need banks and money market funds holding risky securities to intermediate to provide the vast amount of safe securities people want. You have to put up with runs, TBTF guarantees, massive regulation and so on."

Well, suppose the Treasury were to fund itself entirely by floating rate debt. We could have $11 trillion of floating-rate debt, generating $11 trillion of perfectly safe money-market fund assets. We can be awash in fixed-value assets, and could happily ban the rest, largely eliminating run-prone assets from the financial system.

(What about the interest rate risk, you ask? Didn't I just advocate the opposite, that Treasury needs to issue longer-term debt? Yes, but the Treasury can swap out the interest rate risk with fixed-for-floating swaps. Who will buy those swaps? The same people who are buying long-term Treasuries now. Of course, I also suspect that in addition to the Fed's $3 trillion of interest-paying reserves, which are also floating-rate US government debt, the demand will not be more than a few trillion.)
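The swap argument is a cash-flow identity, sketched here under simplifying assumptions of mine: the swap's floating leg pays exactly the floater's rate on the same notional, and spreads and counterparty risk are ignored. The Treasury's net interest bill is then the fixed swap rate whatever short rates do; the swap counterparties bear the interest rate risk.

```python
def treasury_net_interest(floating_rates, fixed_rate, notional):
    """Net interest the Treasury pays each period if it issues a floater and
    enters a pay-fixed, receive-floating swap on the same notional."""
    net = []
    for r in floating_rates:
        paid_to_floater_holders = r * notional
        received_on_swap = r * notional
        paid_on_swap = fixed_rate * notional
        net.append(paid_to_floater_holders - received_on_swap + paid_on_swap)
    return net


# Made-up path of short rates; the fixed swap rate is 2.5%.
print(treasury_net_interest([0.01, 0.03, 0.06], 0.025, 100.0))  # 2.5 each period
```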

My complaint, though (you knew I'd complain, no?), is that Treasury did not go far enough. The proposal says that the Treasury will pay a rate determined by an index:

We have decided to use the weekly High Rate of 13-week Treasury bill auctions, which was described in the ANPR, as the index for Treasury FRNs.

This means that the price of the floating-rate notes will deviate somewhat from par ($100).

I would much rather that the Treasury pegged the value to exactly $100 at all times, buying and selling at that price between auctions, and using direct auctions to reset the rate every month or so.

Why? The alleged "safe asset" demand is for assets with absolutely fixed value. This is why money market funds and their customers are howling about proposals to let values float. With these floating-rate bonds as assets, we will still need money market funds or narrow banks to intermediate, and provide assets with truly fixed values, wiping out a few tens of precious basis points for the end customer in the process (and tempting that customer to "reach for yield" and get us back into trouble again).

If the Treasury issued fixed-value floating-rate debt, in small denominations, electronically transferable, we might not need such funds at all. Or, they would have to compete on providing better IT services for retail customers (likely!) rather than managing the small price fluctuations of floating-rate Treasury assets. The Treasury says it wants to broaden the investor base. Well, the "Treasury money market fund" open to retail investors like you and me is the broadest base it can imagine!

The Treasury may be deliberately avoiding this outcome, to keep money market funds in business (i.e. to provide them with a little artificial profit), as the Fed kept the Federal funds rate 10 bp or so above zero to keep such funds in business. But if funds need an artificially hobbled government security to stay in business, we should let them vanish.

I think the Treasury should also make them perpetual. The proposal says the floaters will have a two-year maturity, forcing owners to cash in principal and buy new again, and Treasury to refinance. Why? Why not make this just like a money market account -- floating rate perpetuities? Big enough surpluses so that the Treasury has to buy back perpetuities are a long way away!

In sum, A- Treasury! But, instead of indexing, fix the price at $100 at all times, using an auction to reset rates; make them available in small denominations to regular investors with easy electronic transfers; and lengthen the maturity. A lot.

Then we can start clamping down on all the stuff that blew up in 2008.

Wednesday, April 10, 2013

Interest rate graphs

Where are interest rates going? Here are two fun graphs I made, for a talk I gave Tuesday at Grant's spring conference, on this question. (Full slide deck here or from link on my webpage here)

Here is a graph of the recent history of interest rates. (These are constant maturity Treasury yields from the Fed) You can see the pattern:

Early in a recession, interest rates fall, but long rates stay above short rates. These are great times for holders of long-term bonds. They get higher yields, but prices also rise as rates fall, so they make money both ways. But you also see the see-saws. Interpretation: long-term bond holders are getting a premium for holding interest rate risk at a time that nobody wants to hold risks.

Then there is the flat part at the bottom of the recession. Now long-term bond holders get the yield, but interest rates don't change. Still, they're making money.

Then comes the interest rate rise, when long-term bond holders lose money. Obviously, you want to get out before interest rates start rising. But it's not easy. Nobody knows for sure how long recessions will last. (That seems to be the lesson of more serious work too.) Look at all the fits and starts, all the zigzags in long interest rates. You don't want to be caught napping like in 1994. But if you, like me, thought last year or the year before looked like 1994, you got out too early. Welcome to risk and return. Notice that in 2003 long rates started rising long before the Fed did anything.

As I look at the fundamentals, current rates look pretty low. Will inflation really average less than 3% for the next 30 years, so that you just break even on 30-year bonds? But the criticism, "if we're in such trouble, why don't markets see it coming?" is still troublesome. So let's look at the actual market forecast:

The solid blue and red lines are today's forward curve and yield curve, respectively. (This is the Gürkaynak, Sack, and Wright data.) The blue forward curve is the market expectation of where interest rates will go in the future. You can lock in these rates today, so if you really know something different is going to happen, you can make a fortune. (This is why I'm not persuaded by arguments that the Fed is driving rates down below market expectations.) We can interpret this blue forward curve as the "consensus forecast." The economy slowly recovers, and interest rates slowly rise back to normal levels (4%) consistent with 2% inflation and 2% real rates. The fall back to 3% rates at the long end of the curve seems a bit low, but that's the market forecast, and always a good place to start prognosticating.
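For readers who want the mechanics of "locking in" a forward rate, here is a minimal Python sketch. It assumes continuously compounded zero-coupon yields at whole-year maturities (a simplification; the Gürkaynak, Sack, and Wright curve is a fitted continuous curve), and the yields are invented for illustration, not today's curve. Buying an n-year zero and financing it by shorting (n-1)-year zeros costs nothing today and pins down the rate you earn between years n-1 and n. That rate is exactly the forward rate computed from the yield curve.

```python
import math


def forward_curve(yields):
    """yields[n-1] is the continuously compounded n-year zero yield.
    Forward rate for year n: f(n) = n*y(n) - (n-1)*y(n-1)."""
    forwards, prev = [], 0.0
    for n, y in enumerate(yields, start=1):
        forwards.append(n * y - prev)
        prev = n * y
    return forwards


def locked_in_rate(yields, n):
    """Buy one n-year zero, short enough (n-1)-year zeros to make the net cost
    zero today. You pay `units_short` at year n-1 and receive 1 at year n."""
    price_n = math.exp(-n * yields[n - 1])
    price_prev = math.exp(-(n - 1) * yields[n - 2]) if n > 1 else 1.0
    units_short = price_n / price_prev
    return math.log(1.0 / units_short)  # continuously compounded rate earned


yields = [0.001, 0.003, 0.007, 0.012, 0.016]  # illustrative, not actual data
fwd = forward_curve(yields)
for n in range(1, len(yields) + 1):
    print(n, round(fwd[n - 1], 6), round(locked_in_rate(yields, n), 6))
```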

If the path of future interest rates follows the blue forward curve, there is no bloodbath in long-term bonds: at every date going forward, bonds of all maturities earn the same rate of return, the forward rate for that date. (Proving this is a good finance class problem.)
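Here is a numerical version of that exercise, as a minimal Python sketch. It uses continuously compounded log prices, p(n) = -n y(n), and an invented yield curve, and it assumes that next year's bond prices are exactly what today's forward rates imply: p_{t+1}(n-1) = p_t(n) - p_t(1). Under that assumption every bond, whatever its maturity, earns the same one-year return. This year that is the one-year yield, next year it is today's two-year forward rate, and so on.

```python
def log_prices(yields):
    """p[n] = log price of an n-year zero (continuously compounded yields).
    p[0] = 0 because a maturing bond is worth exactly $1."""
    return [0.0] + [-n * y for n, y in enumerate(yields, start=1)]


def roll_forward(p):
    """Next year's log prices if rates follow today's forward curve:
    p_{t+1}(n-1) = p_t(n) - p_t(1). The longest maturity drops off."""
    return [p[n] - p[1] for n in range(1, len(p))]


def holding_returns(p_now, p_next):
    """One-year log holding-period return on each n-year bond:
    buy at p_t(n), sell a year later at p_{t+1}(n-1)."""
    return [p_next[n - 1] - p_now[n] for n in range(1, len(p_now))]


yields = [0.001, 0.003, 0.007, 0.012, 0.016]  # illustrative, not actual data
p = log_prices(yields)
for year in range(3):
    p_next = roll_forward(p)
    returns = holding_returns(p, p_next)
    print(year, [round(r, 6) for r in returns])  # every maturity earns the same return
    p = p_next
```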

How good are market forecasts though? This may be the market's best guess, but a lot of the future is simply unknowable. The thin blue and red lines show the forward curve and yield curve in April 2010. You can see that at the time, the consensus market forecast was for interest rates to rise starting sooner, and to rise more quickly. We all expected the recession to end quickly, as recessions usually do.

In 2010, the market forecast that today's interest rate would be 3.5%, not zero. I graphed that forecast and its realization with the leftmost vertical arrow. Furthermore, the entire 2010 forward curve forecasts the entire forward curve today. So, second point from the left, in 2010 the market forecast that today's one-year forward rate would be about 4.2%, not the tenth of a percent or so that we see. If the forward rate forecast were correct, today's forward curve would lie exactly on the 2010 forward curve. (Proving that is another nice problem set question for finance classes.)
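The second exercise can be checked the same way. This minimal Python sketch again assumes continuously compounded yields, an invented starting curve, and rates that evolve exactly as the forward curve predicts; forward rates are f(n) = p(n-1) - p(n). After rolling the curve forward k years (k = 3 stands in for April 2010 to April 2013), the new forward rate at maturity n equals the old forward rate at maturity n + k, so the two curves plotted against calendar dates lie on top of each other.

```python
def log_prices(yields):
    """p[n] = log price of an n-year zero; p[0] = 0."""
    return [0.0] + [-n * y for n, y in enumerate(yields, start=1)]


def roll_forward(p):
    """Log prices one year later, assuming rates follow the forward curve."""
    return [p[n] - p[1] for n in range(1, len(p))]


def forwards(p):
    """f(n) = p(n-1) - p(n): the rate for lending from year n-1 to year n."""
    return [p[n - 1] - p[n] for n in range(1, len(p))]


start = log_prices([0.001, 0.003, 0.007, 0.012, 0.016, 0.019, 0.022])  # illustrative
k = 3  # years elapsed, e.g. April 2010 to April 2013
later = start
for _ in range(k):
    later = roll_forward(later)

old_f, new_f = forwards(start), forwards(later)
for n, f in enumerate(new_f, start=1):
    # Same calendar date: maturity n on the new curve is maturity n + k on the old.
    print(n, round(f, 6), round(old_f[n - 1 + k], 6))
```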

So, from the perspective of 2010, we have seen quite a large, surprise, downward shift of the "market expectations" in the forward curve. It has been a great few years for holders of long-term bonds.

However, beware: What goes down can come up again. To those who say "interest rates are low, the market doesn't see trouble coming, why worry?" I think the graph shows the magnitude of interest rate risk. And risk goes in both directions.
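To put a rough number on the magnitude of interest rate risk, here is a minimal Python sketch pricing a 30-year bond with a 3% coupon, bought at par, after long rates move one percentage point in either direction. It assumes annual coupons and a flat yield curve (simplifications; Treasuries pay semiannually), and the rates are round illustrative numbers, not market data.

```python
def bond_price(coupon_rate, yield_rate, years, face=100.0):
    """Price of a fixed-coupon bond with annual payments, discounted at a flat yield."""
    coupon = coupon_rate * face
    pv_coupons = sum(coupon / (1 + yield_rate) ** t for t in range(1, years + 1))
    return pv_coupons + face / (1 + yield_rate) ** years


for y in (0.02, 0.03, 0.04):
    price = bond_price(0.03, y, 30)
    # Bought at par ($100), so the dollar change is also the percent change.
    print(f"yield {y:.0%}: price {price:.2f}, change {price - 100:+.2f}%")
```

With these numbers, a one-point rise costs a bit more than 17% of the bond's value and a one-point fall gains a bit more than 22%: risk in both directions, and not small.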

So, I'm still doom and gloomy. But the danger we face is unpredictable. Large debts mean that the government is out of ammunition, didn't pay the insurance bill, the fire extinguishers are empty, we are prone to a run or a sovereign debt "bubble" bursting (I hate that word, but I think it conveys the spirit), choose your analogy. We could have a Japanese decade. It could be February 1994, when spreads and recent history looked a lot like today's. Or we could be on the edge of Greece, whose interest rates were pretty low once upon a time as well. The market forecast interpolates between these options.

The first principle of portfolio maximization is risk management, not prognostication. There remains a lot of risk.

Labels: Commentary, Finance, Inflation, Monetary Policy

Saturday, January 19, 2013

Is Finance Too Big?

Is finance too big? Here's a draft essay on the subject. There is a PDF on my webpage, and updates, revisions and a final version will end up there.

This came about as a "response essay" to Robin Greenwood and David Scharfstein's "The growth of modern finance" for the Journal of Economic Perspectives. That's why Robin and David are the target of a lot of criticism. But they're really just standing in for a lot of opinion that finance is "too big," in part because they did such a good and evenhanded job of synthesizing that point of view. So, sorry for picking on you, Robin and David!

I'm sure the JEP will make me cut it down and tone it down, so this is the fun first draft.

Is Finance Too Big?

John H. Cochrane [1],[2]

January 7, 2013

I. Introduction

The US spends $150 billion a year on advertising and marketing[3]. $150 billion, just to trick people into buying stuff they don’t need. What a waste.

There are 2.2 people doing medical billing for every doctor that actually sees patients, costing $360 billion[4] -- 2.4% of GDP. Talk about “too big!”

Wholesale, retail trade and transportation cost 14.6% of GDP, while all manufacturing is only 11.5% of GDP. We spend more to move stuff around than to make it!

A while ago, my wife asked me to look at light fixtures. Have you seen how many thousands of different kinds of light fixtures there are? The excess complexity is insane. Ten ought to be plenty.

It’s ridiculous how much people overpay for brand names when they can get the generic a lot cheaper. They must be pretty naive.

Business school finance professors are horribly overpaid. Ask an anthropologist! We must really have snowed university administrations to get paid nearly half a million bucks and work a grand total of 10 weeks a year, all to teach students that there is no alpha to be made in the stock market.

Did you know that Kim Kardashian gets $600,000 just to show up at a nightclub in Vegas? How silly is that?

It’s a lot of fun to pass judgment on “social benefits,” “size,” “complexity” of industry, and “excessive compensation” of people who get paid more than we do, isn’t it? But it isn’t really that productive either.

As economists we have a structure for thinking about these questions.

We start with the first welfare theorem: loosely, supply, demand and competition lead to socially beneficial arrangements. Yet the world around us often doesn't obviously conform to simple supply and demand arguments. See above. Then, we embark on a three-pronged investigation: First, maybe there is something about the situation we don't understand. Durable institutions and arrangements, despite competition and lack of government interference, sometimes take us years to understand. Second, maybe there is a "market failure," an externality, public good, natural monopoly, asymmetric information, or missing market, that explains our puzzle. Third, we often discover a "government failure," that the puzzling aspect of our world is an unintended consequence of law or regulation. The regulators got captured, the market innovated around a regulation, or legal restrictions stop supply and demand from working.

Once we understand a puzzle, we are in a position to begin to diagnose a divergence between reality and socially desirable outcomes, and we can start to think about how to improve the outcome. But though "some" may find the world puzzling, or "many" might "voice concerns," we don't pronounce until we understand, quantitatively, how one of these mechanisms describes the situation. Cocktail-party externalities need not apply.

“I don’t understand it” doesn’t mean “it’s bad.” And since that attitude pervades regulation in general and financial regulation in particular, we do the world a disservice if we echo it.

I belabor this point, because I do not offer a competing black box. I don’t claim to estimate the socially-optimal “size of finance” at 8.267% of GDP, so there. Though apparently rhetorically effective, this is simply not how we do economics. After all, if a bunch of academics could sit around our offices and decide which industries were “too big,” which ones were “too small,” and close our papers with “policy recommendations” to remedy the matter, central planning would have worked. A little Hayekian modesty suggests we focus on documenting the distortions, not pronouncing on optimal industry sizes. Documenting distortions has also been, historically, far more productive than pronouncing on the optimal size of industries, optimal compensation of executives, “global imbalances,” “savings gluts,” “excessive consumption,” or other outcomes.